Chapter 9 Notes

writing output

To write messages, cat() or message() can be used. Their differences:

cat()goes to standard out andmessage()goes to standard error. When used inside of some parallel processing backends,cat()output is obscured inside of the RStudio IDE.Unless coded otherwise,

cat()uses the usual formatting whereas the default formatting formessage()is different.

For this code:

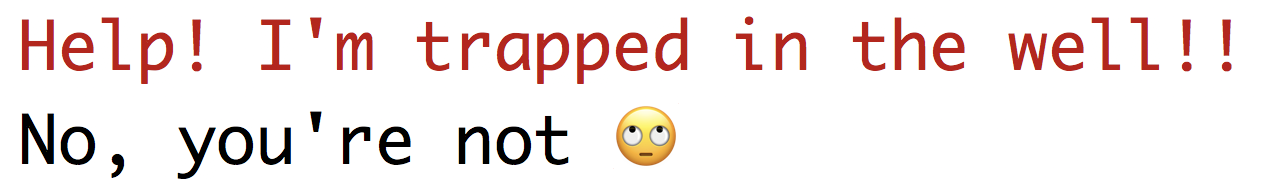

library(emojifont)

message("Help! I'm trapped in the well!!")

cat("No, you're not", emoji('roll_eyes'), "\n")the Rstudio IDE output is:

and basic terminal output is:

This post may also be helpful in deciding.

decoupling functions

For example, for some modeling method, the function foo would be the top-level api that users experience and some other function (say compute_foo_fit) is used to do the computations. This allows for different interfaces to be used to specify the model that pass common data structures to compute_foo_fit.

appropriate data structures

For example:

- Categorical data should be in factor variables (as opposed to binary or integer representations).

- Rectangular data structures that allows for mixed data types should always be used even when the envisioned application would only make sense when the data are single type.

- For strictly univariate response models, vector input is acceptable for the outcome argument.

- Censored data should follow the

survival::Survconvention.

top-level design examples

For example:

Suppose a model can only fit numeric or two-class outcomes and uses maximum likelihood. Instead of providing the user with a

distributionoption that is either “Gaussian” or “Binomial”, determine this from the type of the data object (numeric or factor) and set internally. This prevents the user from making a mistake that could haven been avoided.If a model parameter is bounded by some aspect of the data, such as the number of rows or columns, coerce bad values to this range (e.g.

mtry = min(mtry, ncol(x))) with an accompanying warning when this is critical information.

avoid common parameter names

For example, control is the type of argument used in many functions so have a function specific argument (e.g. foo_control) is advisable in these situations.

Checking of ...

The conflicted package can be used to solve this issue.

standardize names

For example, many functions use some variation of level for confidence levels (as opposed to alpha). The names in Chapter 7 are preferable for the specific context.

discussing new names

A good venue for this discussion is RStudio Community

avoid computing on call objects

Historically, the call object that results from a model fit was considered a good method to capture details of the existing model fit. This object could be parsed and manipulated for the purpose of continuing or revising the model between function calls.

This can be problematic because the call object is relative to the environment and the call itself is agnostic to its original environment. If the call is changed and re-evaluated, the current environment may be inappropriate. This could result in errors due to the required objects not being exposed in the current environment.

Note that the internals of common modeling functions, such as lm, do exactly this. However, these manipulations occur within the function call so that the environment is the same.

minimal object retention

For example, if summary statistics are computed on the model object, derive them on-the-fly during the execution of the summary method. Avoid saving these statistics to the model object unless they are related to ephemeral values generated during the model fit but do not need to be retained afterwards.

consistent number of rows

This should enable the ability to bind the results with the original data:

cbind(data, predict(model, data)) # or

predict(model, data) %>% bind_cols(data)extra tibble classes

Adding a new class to a tibble object might cause subsequent errors to dplyr operations. This code is a good example for how to maintain dplyr compatibility.

list column output

Some examples:

posterior distirbutions

If a posterior distribution is returned for each sample, each element of the list column can be a tibble with as many rows as samples in the distribution.

multiple hyperparameters

When a predict method produces values over multiple tuning parameter values (e.g. glmnet), the list column elements have rows for every tuning parameter value combination that is being changed (e.g. lambda in glmnet).

survivor probability predictions

In time to event models, where survivor probabilities are produced for values of time, the return tibble has a column for .time. Other column names should conform to the standards here (e.g. .pred_lower if intervals are also returned). As an example from ?flexsurv::flexsurvreg:

library(flexsurv)

data(ovarian)

fitg <- flexsurvreg(formula = Surv(futime, fustat) ~ age, data = ovarian, dist = "gengamma")For each new sample, this model can make probabilistic predictions at a number of user-specified time points.

preds <- summary(fitg, newdata = ovarian[1:2, "age", drop = FALSE], t = c(100, 200, 300))

preds## age=72.3315

## time est lcl ucl

## 1 100 0.7988680 0.51091386 0.9648924

## 2 200 0.4849180 0.15909221 0.7913010

## 3 300 0.2784226 0.02375807 0.6402882

##

## age=74.4932

## time est lcl ucl

## 1 100 0.7278338 0.3557049000 0.9594533

## 2 200 0.3856737 0.0408961893 0.7646142

## 3 300 0.1964611 0.0003635201 0.6010698A list of data frames is not very helpful. Better would be a data frame with a list column, where every element is a data frame with the predictions in the tidy format:

tidy_preds## # A tibble: 2 x 1

## .pred

## <named list>

## 1 <tibble [3 × 4]>

## 2 <tibble [3 × 4]># predictions on the first subject at 3 time points

tidy_preds$.pred[[1]]## # A tibble: 3 x 4

## .time .pred .pred_lower .pred_upper

## <dbl> <dbl> <dbl> <dbl>

## 1 100 0.799 0.511 0.965

## 2 200 0.485 0.159 0.791

## 3 300 0.278 0.0238 0.640If a single data frame is needed, it is easy to make the conversion:

tidyr::unnest(tidy_preds)## # A tibble: 6 x 4

## .time .pred .pred_lower .pred_upper

## <dbl> <dbl> <dbl> <dbl>

## 1 100 0.799 0.511 0.965

## 2 200 0.485 0.159 0.791

## 3 300 0.278 0.0238 0.640

## 4 100 0.728 0.356 0.959

## 5 200 0.386 0.0409 0.765

## 6 300 0.196 0.000364 0.601quantile predictions

When using a quantile regression, one might make the median the default that is predicted. If multiple percentiles are requested, then .pred would be a tibble with a column for the predictions and another for the quantile (named .quantile).

where to parallelize?

For example, if a random forest model is being created the parallelism could simultaneously fit the trees in the ensemble or parallelize the search for optimal splits within each tree. All other things being equal, the former is preferred.

controlling random numbers in parallel workers

One simple approach when parallelism is conducted using R subprocesses (e.g. via foreach or futures) is to pre-compute seeds and have a seed argument to the function being invoked. The function can immediately set the seed from the argument when it begins execution.

predictions shouldn’t depend on new_data

Sometimes spline expansions or similar terms can behave oddly when packages haven’t been fully loaded. For example:

fit <- lm(mpg ~ disp + hp + splines::ns(drat, 2), mtcars)

hp <- head(predict(fit, mtcars))

ph <- predict(fit, head(mtcars))

all.equal(hp, ph)## [1] TRUELoading the splines package fixes this:

library(splines)

fit <- lm(mpg ~ disp + hp + ns(drat, 2), mtcars)

hp <- head(predict(fit, mtcars))

ph <- predict(fit, head(mtcars))

all.equal(hp, ph)## [1] TRUEA general way to check for errors like this is to check that predict() and head() commute.

models should have meaningful classes

This makes it easier to provide methods that might be meaningfully defined for only some models accessible via the convenience interface. For example, consider generalized linear models. It makes sense to define hard class predictions for logistic regression, but not linear regression. It is easiest to define prediction methods specific to linear and logistic regression if each is represented by an R object with different classes.